LLM-Orchestrated Upsell & Service Automation

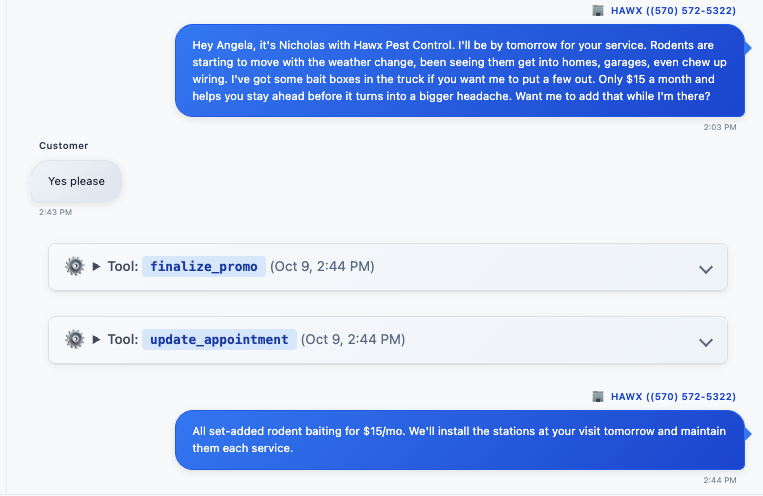

LLM orchestration in action, with tool calls and reasoning

24/7 upsell & service agent, not just a “bot”

We started with a very simple idea: send customers an SMS the night before their service asking if they wanted a one-time mosquito treatment. The first version was entirely human-driven: replies went into a queue that our customer care team in the Philippines handled when they came online around 6–8 a.m. The problems showed up immediately. Customers were replying to what looked like a personal text from their technician, then hearing nothing back for 12 hours; in many cases, the service had already been completed by the time we responded. We were losing conversion and creating a jarring, low-trust experience.

Once we brought in LLMs, the first obvious win was coverage: an agent that could respond instantly, 24/7, to “yes/no” upsell replies and basic questions. But we quickly realized most conversations weren’t clean yes/no decisions. Customers would say things like, “No thanks, but can you help with XYZ?” or “Actually I need to reschedule tomorrow’s visit.” Some of those were safely automatable; others needed a human. So we designed the agent with explicit escalation paths: when it hit something out of scope, it didn’t just silently hand off the thread. It told the customer, apologized for the limitation, and let them know a manager or care rep would follow up, with behavior that varied by time of day and staffing.
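A minimal sketch of that escalation rule, assuming intent classification has already happened upstream. The intent labels, the staffed window, and the message wording are all hypothetical placeholders, not the production values.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional

# Hypothetical values: the staffed window and intent labels are illustrative only.
STAFFED_FROM = time(8, 0)
STAFFED_UNTIL = time(18, 0)
IN_SCOPE_INTENTS = {"upsell_yes", "upsell_no", "basic_question"}


@dataclass(frozen=True)
class Routing:
    handled_by: str                  # "agent" or "human"
    customer_message: Optional[str]  # what the customer is told on handoff


def route_reply(intent: str, now: time) -> Routing:
    """Keep in-scope intents with the agent; otherwise hand off and say so."""
    if intent in IN_SCOPE_INTENTS:
        return Routing("agent", None)
    # Out of scope: the follow-up promise depends on whether anyone is staffed.
    if STAFFED_FROM <= now < STAFFED_UNTIL:
        follow_up = "A care rep will follow up with you shortly."
    else:
        follow_up = "Our team is offline right now, so a manager will follow up in the morning."
    return Routing("human", "Sorry, I can't help with that here. " + follow_up)
```

The point of returning the customer-facing message alongside the routing decision is that a handoff can never happen without the customer being told about it.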

Rescheduling turned out to be the dominant “non-upsell” use case and one of the hairiest operationally. Tight technician routes are great for efficiency and terrible for last-minute changes. To make rescheduling a first-class part of the experience, we exposed scheduling tools to the agent: query routes, find alternative time windows, move appointments, and add notes, all while respecting constraints from our routing engine. That turned the system from a pure marketing upsell bot into a genuine service co-pilot that could actually remove friction for the customer rather than just selling to them.
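One way to picture the tool layer is a small registry the agent calls by name. This is a sketch under assumptions: the function names, the `Appointment` shape, and the in-memory `OPEN_WINDOWS` table (standing in for the routing engine) are hypothetical, and route querying is left out for brevity.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Callable, Dict, List

# Stand-in for the routing engine: open windows per day (hypothetical data).
OPEN_WINDOWS: Dict[date, List[str]] = {
    date(2025, 6, 4): ["08:00-10:00", "13:00-15:00"],
}


@dataclass
class Appointment:
    appointment_id: str
    day: date
    window: str
    notes: List[str] = field(default_factory=list)


def find_alternative_windows(day: date) -> List[str]:
    """Return the windows the routing stand-in still has open on `day`."""
    return list(OPEN_WINDOWS.get(day, []))


def move_appointment(appt: Appointment, day: date, window: str) -> Appointment:
    """Move only into a window the routing stand-in reports as open."""
    if window not in OPEN_WINDOWS.get(day, []):
        raise ValueError(f"{window} on {day} is not available")
    OPEN_WINDOWS[day].remove(window)  # the slot is now taken
    appt.day, appt.window = day, window
    return appt


def add_note(appt: Appointment, text: str) -> Appointment:
    """Attach a free-text note to the appointment."""
    appt.notes.append(text)
    return appt


# The agent only sees this registry, never the routing engine directly.
TOOLS: Dict[str, Callable] = {
    "find_alternative_windows": find_alternative_windows,
    "move_appointment": move_appointment,
    "add_note": add_note,
}


def call_tool(name: str, **kwargs):
    """Dispatch a tool call requested by the model; unknown names are rejected."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)
```

Because `move_appointment` validates against the open windows itself, a model that asks for an unavailable slot gets an error back rather than silently breaking a route.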

From one giant prompt to a multi-agent system

The first version of this lived behind a single, giant system prompt trying to do everything: write outreach, decide if we should send it, handle replies, and escalate. That didn’t last long. A good example: some customers used our outreach as a natural moment to say, “Actually I want to cancel my subscription.” Those are the cases where you almost wish you hadn’t texted them at all—particularly in fall and winter when pest pressure is low and people are already questioning why they’re paying monthly.

Operationally, the math looked like this: if you had 500 appointments tomorrow and messaged all 500, roughly 10% (50 people) bought the upsell. But a handful, say 7 or 8, would churn because you reminded them you exist. The question became: how do we still get roughly those 50 conversions while reducing the total number of sends and avoiding obvious churn risks? In other words, can we shrink the top of the funnel while holding the bottom of the funnel flat?

We introduced a separate “judge” agent whose only job was to decide whether we should send the outreach at all. It had access to rich customer context, including third-party churn scores, tenure, recency/frequency of visits, and recent communications. We asked it to do two things: (1) return a risk score and a binary yes/no on “should we send,” and (2) select a discrete primary reason when it recommended not sending (e.g., high churn risk, duplicate communication, just rescheduled). Early on, it went overboard—something like 80% of customers were flagged as likely churners. We tightened the rules (e.g., explicitly handling duplicate communications when customers had just spoken to us or had back-to-back appointments) and calibrated thresholds.
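The judge's contract (a risk score, a yes/no, and a discrete reason whenever the answer is no) lends itself to strict parsing. The sketch below assumes the judge replies in JSON with `risk_score`, `send`, and `reason` keys and that the score lies in [0, 1]; those field names and that range are assumptions, and the three reasons are just the examples named above.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class SuppressReason(Enum):
    HIGH_CHURN_RISK = "high_churn_risk"
    DUPLICATE_COMMUNICATION = "duplicate_communication"
    JUST_RESCHEDULED = "just_rescheduled"


@dataclass(frozen=True)
class JudgeDecision:
    risk_score: float
    send: bool
    reason: Optional[SuppressReason]  # set only when send is False


def parse_judge_output(raw: str) -> JudgeDecision:
    """Validate the judge's JSON reply; malformed replies raise ValueError."""
    data = json.loads(raw)
    risk = float(data["risk_score"])
    if not 0.0 <= risk <= 1.0:  # assumed score range
        raise ValueError("risk_score must be between 0 and 1")
    send = data["send"]
    if not isinstance(send, bool):
        raise ValueError("send must be a JSON boolean")
    # A "no" must carry a listed reason: the Enum lookup raises ValueError
    # when the reason is missing or not one of the discrete options.
    reason = None if send else SuppressReason(data.get("reason"))
    return JudgeDecision(risk, send, reason)
```

Forcing a reason from a closed list is what makes the suppressed set auditable: the reasons can be counted and spot-checked, which is how an over-eager judge gets noticed.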

By the time it settled, we were cutting out ~8–10% of potential sends. When we inspected the suppressed list, nearly all of it was customers we agreed we shouldn’t ping. And crucially, even though we sent fewer messages, we saw only about a 2% drop in total conversions. In practice, we kept almost all the upside (upsells) while avoiding the most obvious downside (self-inflicted churn and customer annoyance).
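To make the funnel trade-off concrete, here is the arithmetic using the round numbers from the text: 500 appointments, a 10% conversion rate, the upper end of the suppression range (10%), and a 2% drop in conversions. The function and its parameter names are illustrative only.

```python
def funnel(appointments: int, suppress_rate: float,
           base_conversion_rate: float, conversion_drop: float):
    """Return (sends, conversions, conversions per send) after suppression."""
    sends = appointments * (1 - suppress_rate)
    conversions = appointments * base_conversion_rate * (1 - conversion_drop)
    return sends, conversions, conversions / sends


# Message everyone vs. let the judge suppress 10% of sends.
baseline = funnel(500, 0.0, 0.10, 0.0)
gated = funnel(500, 0.10, 0.10, 0.02)

for label, (sends, conversions, per_send) in (("baseline", baseline), ("gated", gated)):
    print(f"{label}: {sends:.0f} sends, {conversions:.0f} conversions, {per_send:.1%} per send")
```

With these inputs, 50 fewer messages cost about one conversion (50 down to 49), and conversions per send rise from 10% to roughly 10.9%.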

Split up system prompts into manageable, reusable chunks

Personalized outreach, composed overnight

Once we saw the value of a “don’t-send” judge, we realized there was no reason the initial outreach had to be generic either. Using off-peak pricing on some of the models, we started running a nightly batch process. Around midnight, a dedicated “composer” agent would generate a custom outreach message for each eligible appointment. We gave it structured guardrails but also full access to customer context (history, service type, season, region) and simply asked: “Write the highest-converting message you can for this customer and this offer.”
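A rough sketch of what the nightly composer step could look like. The guardrail text, the appointment dictionary keys, and the `generate` callable (a stand-in for whichever model call is used) are assumptions for illustration; only the task sentence comes from the text above.

```python
from typing import Callable, Dict, Iterable

# Hypothetical guardrail text; the real guardrails are not given in the source.
GUARDRAILS = (
    "Stay under 320 characters. Mention only the offer provided. "
    "Do not promise prices or dates that are not in the context."
)
TASK = ("Write the highest-converting message you can "
        "for this customer and this offer.")


def build_prompt(context: Dict[str, str], offer: str) -> str:
    """Assemble guardrails, customer context, and the task into one prompt."""
    context_lines = "\n".join(
        f"- {key}: {value}" for key, value in sorted(context.items())
    )
    return (
        f"{GUARDRAILS}\n\nOffer: {offer}\n"
        f"Customer context:\n{context_lines}\n\n{TASK}"
    )


def compose_batch(appointments: Iterable[dict],
                  generate: Callable[[str], str]) -> Dict[str, str]:
    """Run once per night: one generated message per eligible appointment."""
    return {
        appt["appointment_id"]: generate(build_prompt(appt["context"], appt["offer"]))
        for appt in appointments
        if appt.get("eligible", True)
    }
```

Passing `generate` in as a parameter keeps the batch logic testable without a model and makes it easy to point the overnight run at whichever model has off-peak pricing.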

That personalization moved the needle twice. Quantitatively, it bumped conversions another ~3–4 percentage points. Qualitatively, the messages just felt more human. When we read them back, they sounded like a tech who actually knew the property and the customer history, not a marketing template. A subtle but telling signal: total response volume went up even though many replies were “No thanks.” That’s actually a win — it means the message felt personal enough that people bothered to respond, not just ignore it as another blast.

By the end, the outbound orchestration looked like this:

Agent 1 – Composer (runs overnight): generates a tailored outreach message per appointment using rich customer context.

Agent 2 – Send-gate / Judge: inspects the customer, message, and history to decide if we should send at all, and records a concrete reason when the answer is “no.”

Agent 3 – Conversational agent (real time): handles replies, executes tools (reschedule, add notes, etc.), and escalates with clear expectations when out of scope.

This multi-agent split made the system more reliable and much easier to evolve.
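The outbound half of that split can be sketched as a small loop in which each agent is just a callable. The `compose`, `judge`, and `send_sms` parameters and the `Outcome` record are hypothetical stand-ins; the conversational agent is not shown because it only runs once a customer replies.

```python
from typing import Callable, Iterable, List, NamedTuple, Optional, Tuple


class Outcome(NamedTuple):
    appointment_id: str
    sent: bool
    message: str
    suppress_reason: Optional[str]  # recorded only when the judge says no


def run_outbound(
    appointment_ids: Iterable[str],
    compose: Callable[[str], str],                             # Agent 1 stand-in
    judge: Callable[[str, str], Tuple[bool, Optional[str]]],   # Agent 2 stand-in
    send_sms: Callable[[str, str], None],
) -> List[Outcome]:
    """Compose a message per appointment, then send only if the judge allows it."""
    outcomes = []
    for appt_id in appointment_ids:
        message = compose(appt_id)
        should_send, reason = judge(appt_id, message)
        if should_send:
            send_sms(appt_id, message)
            outcomes.append(Outcome(appt_id, True, message, None))
        else:
            outcomes.append(Outcome(appt_id, False, message, reason))
    return outcomes
```

Keeping each agent behind its own narrow interface is what lets one be re-prompted or swapped without touching the others.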

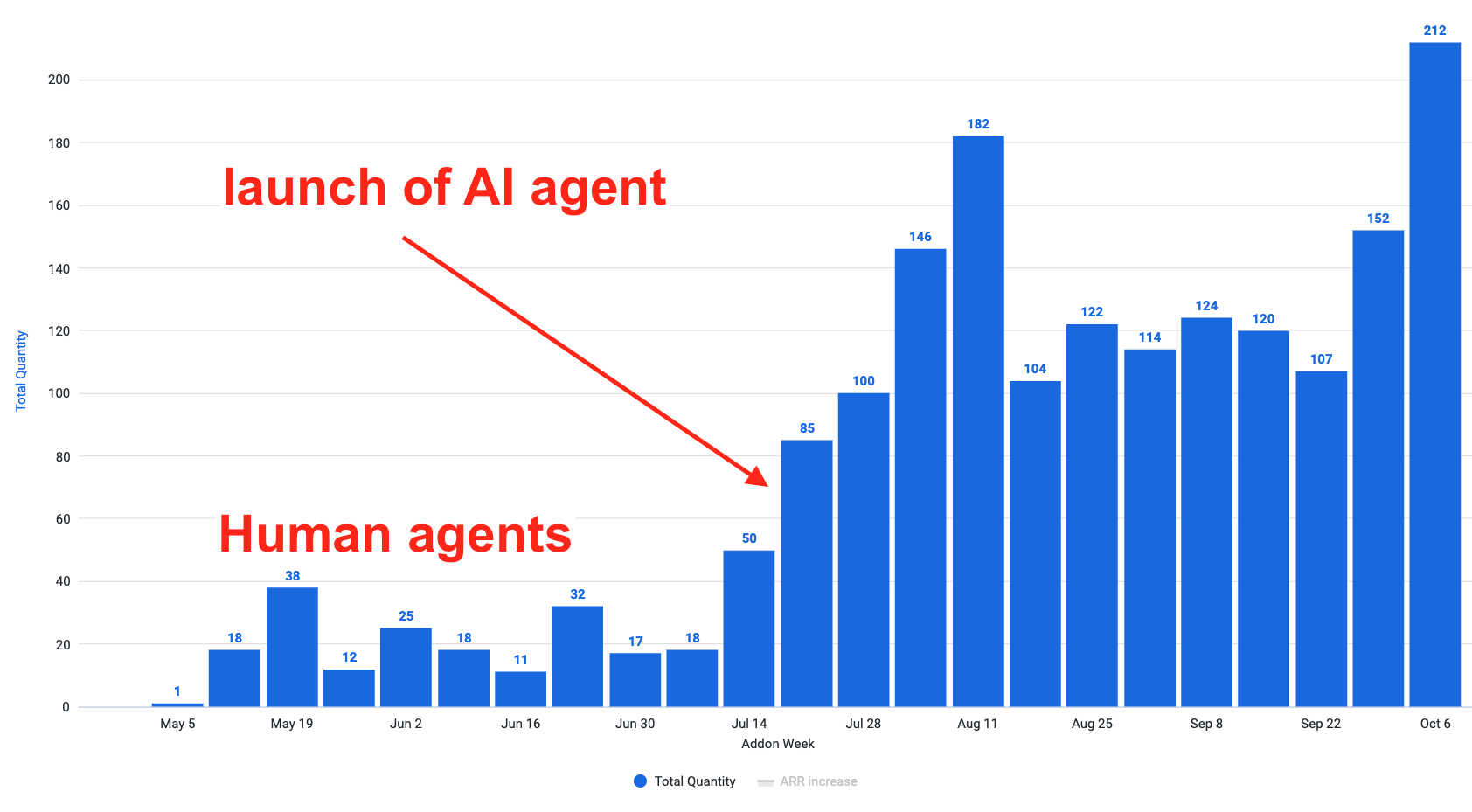

From next-day upsell to a true cross-sell engine

Once the core loop was working for “tomorrow’s appointment + one-time mosquito,” the next question was: why stop there? We have multiple add-on services (mosquito, rodent boxes, etc.), each with seasonal and geographic constraints, plus different revenue profiles. We wanted to move from a single-offer campaign to a proper cross-sell engine.

We added an offer-selection agent with two responsibilities:

Eligibility: Given a customer and their upcoming (or recent) service, determine which offers they technically qualify for. Do they already have this product? Is it out of season? Are they in a geography where this service is even available? That filtered out nonsense offers early.

Ranking & choice: For the eligible set, select the “best” offer for this customer and this moment. That meant balancing things like expected revenue, local context (e.g., nearby rodent issues), weather, and service type, then returning a single recommended upsell.

That agent became a shared endpoint. We first wired it into our SMS pipeline so the overnight Composer wasn’t just writing better messages, it was writing better messages for the right offer. Just as importantly, we exposed the same endpoint internally for our human teams.
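The two responsibilities, eligibility first and ranking second, can be illustrated with a deliberately simple rule-based sketch. The `Offer` and `Customer` fields, the `local_boost` multiplier (standing in for signals like nearby rodent issues or weather), and the revenue-times-boost score are all assumptions; the source does not describe the actual selection logic in this detail.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class Offer:
    name: str
    expected_revenue: float
    season_months: FrozenSet[int]  # months (1-12) in which the offer makes sense
    regions: FrozenSet[str]        # where the service is available


@dataclass(frozen=True)
class Customer:
    region: str
    active_products: FrozenSet[str]


def is_eligible(offer: Offer, customer: Customer, month: int) -> bool:
    """Not already owned, in season, and available in the customer's region."""
    return (
        offer.name not in customer.active_products
        and month in offer.season_months
        and customer.region in offer.regions
    )


def best_offer(offers: Iterable[Offer], customer: Customer, month: int,
               local_boost: Optional[Dict[str, float]] = None) -> Optional[Offer]:
    """Filter to eligible offers, then return the single highest-scoring one."""
    boost = local_boost or {}
    candidates = [o for o in offers if is_eligible(o, customer, month)]
    if not candidates:
        return None
    return max(candidates, key=lambda o: o.expected_revenue * boost.get(o.name, 1.0))
```

Returning `None` when nothing qualifies matters as much as the ranking: it is what keeps every downstream channel from pitching an offer the customer cannot use.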

Turning customer care from cost center to profit center

Hawx gets 1,000–3,000 inbound customer care calls per day. Historically, that function had been treated as a pure cost center: the goal was to resolve issues and move on. But psychologically, the end of a successful call is one of the best possible moments to ask for an add-on — the customer has just had a positive interaction and gotten what they came for.

We saw an opportunity to use the same orchestration we’d built for SMS to empower our human reps:

The system already had real-time call transcripts and full customer context.

The offer-selection logic could pick the best add-on for this specific customer.

Our existing message-generation capabilities could be repurposed to create a short talk track for a human instead of a text message.

So we built a parallel agent flow for customer care. At the end of a call, the system surfaces: “Here’s the best offer for this customer right now, and here are three concise value props to use.” Reps didn’t have to guess what to pitch or how to phrase it — they could simply use the talk track or adapt it in their own words.

The impact was non-trivial. This team had generated essentially zero upsell revenue over the life of the business. After rollout, an average rep was closing 3–4 add-ons per day on a typical workload of 20–30 calls. The attach rate was high enough that customer care started to show up in revenue dashboards as a meaningful contributor instead of a pure expense line. And because it was powered by the same shared infrastructure we built for SMS, every improvement we made to eligibility, offer choice, or messaging benefited both channels.

Extending the engine to field technicians

The last channel we brought online was the field itself. Roughly half the time, when a technician shows up to spray a home, someone is actually there and talking with them. That’s an incredibly high-intent moment — but historically, Hawx lagged industry peers in technician-driven revenue. Most upsells were reactive: a customer would say, “I’ve been seeing this other pest,” and the tech might respond with, “We could do X for that.” There was no systematic, proactive motion.

Technicians also aren’t salespeople. They’re driving trucks, running tight routes, and trying to stay on schedule. Expecting them to memorize offers, eligibility rules, and value props is unrealistic.

We used the exact same offer-selection engine and content generation we’d already built and surfaced it inside the field app technicians were already using. When a tech pulled up an appointment on their tablet/phone, they would see:

The top recommended add-on for that stop, based on the same eligibility and ranking logic used in SMS and customer care.

A short, technician-friendly script with one or two things to say if the customer was home.

It didn’t turn techs into high-pressure sales reps; it simply removed friction and made it easy to have one thoughtful, relevant conversation when it made sense. And because it all rode on the same underlying orchestration, any improvement in the engine benefited SMS, customer care, and field operations simultaneously.

Multi-channel impact on revenue & the business model

By the time all three channels — SMS, customer care, and technicians — were live and in early adoption, the combined impact was already meaningful:

SMS was the most mature, with solid, repeatable conversion rates that scaled with volume.

Customer care was mid-rollout, ramping from zero historical revenue to 3–4 add-ons per rep per day.

The field tech experience was just coming online, but techs were starting to adopt the prompts and see success.

Even at that “partially rolled out” stage, the system was generating on the order of $13,000 per day in incremental recurring revenue from existing customers. Annualized ($13,000 × 365 ≈ $4.7M), that’s close to $5M of additional revenue on a business doing ~$70–80M per year, purely by increasing attach rate and ARPU, not by adding more door-to-door sales headcount.

This was very intentional. The default mindset in the business was “more new sales”: hire more door-to-door reps, push harder on frontline acquisition. I pushed a different, more operator-y view: in a subscription model, raising average monthly revenue per customer by even $10 is massive. We already had a large, renewing base; the question was how to use technology to unlock more value from those relationships across every touchpoint — outbound SMS, inbound calls, and in-person service.

We layered in simple gamification (leaderboards for reps and technicians, clear goals, visibility into who was driving the most attach) so it wasn’t just an AI system running in the background, but something people could engage with and compete around. What started as an outsourced door-to-door sales–led growth model now had meaningful revenue coming from service and support channels that had previously been treated as pure cost centers.

It’s still early, and there’s plenty of runway left, but the orchestration has already started to reshape the business model: multiple channels contributing to growth, higher ARPU on the existing base, and a clearer path to scaling revenue without linearly scaling headcount. That’s the kind of technology-first, operator-style leverage I like building.